Abstract

We present a concise derivation for several influential score-based diffusion models that relies on only a few textbook results. Diffusion models have recently emerged as powerful tools for generating realistic synthetic signals (particularly natural images) and often play a role in state-of-the-art algorithms for inverse problems in image processing. While these algorithms are often surprisingly simple, the theory behind them is not, and multiple complex theoretical justifications exist in the literature. Here, we provide a simple and largely self-contained theoretical justification for score-based diffusion models that is targeted towards the signal processing community. This approach leads to generic algorithmic templates for training and generating samples with diffusion models. We show that several influential diffusion models correspond to particular choices within these templates and demonstrate that alternative, more straightforward algorithmic choices can provide comparable results. This approach has the added benefit of enabling conditional sampling without any likelihood approximation.

Essential Theories in Score-Based Diffusion Models

1. MMSE Denoiser and Its Approximation via Deep Denoiser

Let \( \mathbf{X} \in \mathbb{R}^d \) be a clean signal and \( \mathbf{X}_\sigma = \mathbf{X} + \sigma \mathbf{n} \) be its noisy observation with Gaussian noise \( \mathbf{n} \sim \mathcal{N}(\mathbf{0}, \mathbf{I}) \). The minimum mean squared error (MMSE) estimator is defined as the conditional expectation:

\[ \mathsf{D}(\mathbf{x}, \sigma) = \mathbb{E}\left[\mathbf{X} \mid \mathbf{X}_\sigma = \mathbf{x}\right]. \]

A deep denoiser \( \mathsf{D}_{\theta} \), trained to minimize the mean squared error (MSE) between its output and the corresponding clean sample, approximates this MMSE estimator, because the conditional expectation is exactly the minimizer of the expected squared error. With sufficient training data and model capacity, the trained denoiser approaches the MMSE solution.
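
To make the MSE-to-MMSE argument concrete, the sketch below uses a hypothetical scalar Gaussian prior \( X \sim \mathcal{N}(\mu, s^2) \), for which the MMSE estimator is linear in the noisy input. A linear "denoiser" fitted by least squares (that is, by minimizing the empirical MSE) on simulated noisy/clean pairs should therefore recover the closed-form MMSE coefficients; a deep denoiser plays the same role for non-Gaussian data with a much richer function class. The prior, the helper names, and the NumPy setting are illustrative assumptions, not part of the original method.

```python
import numpy as np


def gaussian_mmse(x_noisy, mu, s, sigma):
    """Closed-form MMSE denoiser E[X | X_sigma = x] for a scalar prior X ~ N(mu, s^2)."""
    return (s**2 * x_noisy + sigma**2 * mu) / (s**2 + sigma**2)


def fit_linear_denoiser(n_samples, mu, s, sigma, seed=0):
    """Fit D(x) = a * x + b by minimizing the empirical MSE on simulated (noisy, clean) pairs."""
    rng = np.random.default_rng(seed)
    x_clean = rng.normal(mu, s, size=n_samples)
    x_noisy = x_clean + sigma * rng.standard_normal(n_samples)
    design = np.column_stack([x_noisy, np.ones(n_samples)])
    coef, *_ = np.linalg.lstsq(design, x_clean, rcond=None)
    return coef[0], coef[1]


mu, s, sigma = 1.0, 2.0, 1.0
a, b = fit_linear_denoiser(200_000, mu, s, sigma)
# The MMSE denoiser here is a* x + b* with a* = s^2 / (s^2 + sigma^2) = 0.8
# and b* = sigma^2 * mu / (s^2 + sigma^2) = 0.2; the fitted (a, b) should be close.
print(f"fitted a={a:.3f}, b={b:.3f}")
```

For non-Gaussian priors the MMSE estimator is generally nonlinear, which is why a flexible network is used in place of the linear fit.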

2. Connection Between Denoiser and Tweedie’s Formula

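Tweedie’s formula establishes a connection between the score function of the noisy distribution \( f_{\mathbf{X}_\sigma} \) and the MMSE denoiser. Under Gaussian noise corruption the identity is exact, and substituting the learned denoiser for the MMSE estimator gives a practical approximation of the score:

\[ \nabla \log f_{\mathbf{X}_\sigma}(\mathbf{x}) = \frac{\mathbb{E}\left[\mathbf{X} \mid \mathbf{X}_\sigma = \mathbf{x}\right] - \mathbf{x}}{\sigma^2} \approx \frac{\mathsf{D}_{\theta}(\mathbf{x}, \sigma) - \mathbf{x}}{\sigma^2}. \]
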
This identity enables the use of a learned denoiser to estimate the score function, providing a practical bridge between supervised denoising and score-based generative modeling.
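
A quick numerical check of Tweedie's formula, sketched under the assumption of a hypothetical scalar Gaussian prior \( X \sim \mathcal{N}(\mu, s^2) \): the noisy marginal is then \( \mathcal{N}(\mu, s^2 + \sigma^2) \), so its score is known exactly and can be compared with the denoiser-based expression. In practice `gaussian_mmse_denoiser` would be replaced by a trained \( \mathsf{D}_{\theta} \); all names here are illustrative.

```python
import numpy as np


def score_from_denoiser(denoiser, x, sigma):
    """Tweedie's formula for X_sigma = X + sigma * n: grad log f_{X_sigma}(x) = (D(x, sigma) - x) / sigma^2."""
    return (denoiser(x, sigma) - x) / sigma**2


# Hypothetical toy prior X ~ N(MU, S^2), whose MMSE denoiser is known in closed form.
MU, S = 1.0, 2.0


def gaussian_mmse_denoiser(x, sigma):
    return (S**2 * x + sigma**2 * MU) / (S**2 + sigma**2)


def exact_noisy_score(x, sigma):
    # X_sigma ~ N(MU, S^2 + sigma^2), so its score is -(x - MU) / (S^2 + sigma^2).
    return -(x - MU) / (S**2 + sigma**2)


x = np.linspace(-5.0, 5.0, 11)
for sigma in (0.1, 1.0, 10.0):
    tweedie = score_from_denoiser(gaussian_mmse_denoiser, x, sigma)
    print(sigma, np.max(np.abs(tweedie - exact_noisy_score(x, sigma))))
```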

3. Sampling with Score Using Random Walks

Langevin dynamics provide a way to sample from a distribution using only its score function. The discretized update rule is:

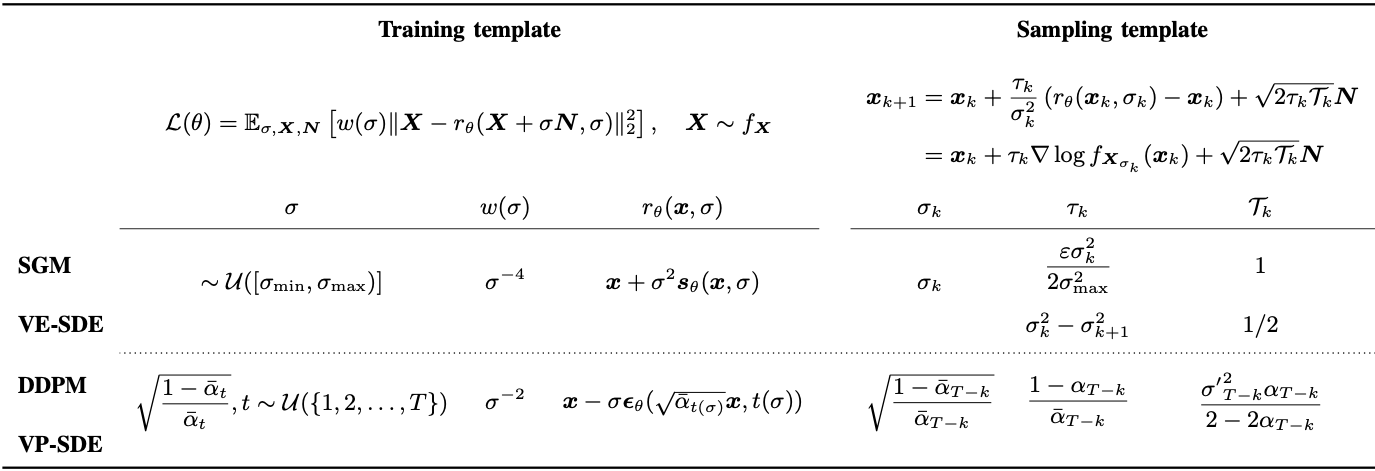

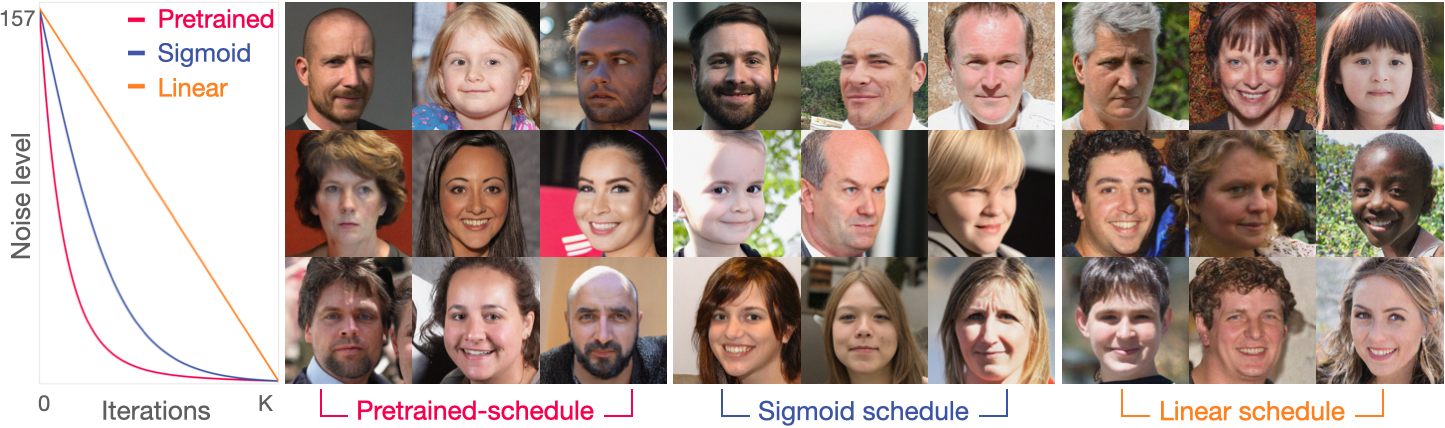

This defines a random walk guided by the score function, with step size \( \tau_k \) and temperature \( \mathcal{T}_k \). By choosing these parameters appropriately, popular sampling algorithms such as NCSN [1], DDPM [2], and Score-SDE [3] can be recovered as special cases (see Table 1 for specific parameter choices). Beyond replicating these algorithms, our paper demonstrates that even straightforward choices of these configurable parameters can yield high-quality samples without strictly adhering to algorithm-specific sampling rules. Diffusion sampling is therefore more flexible than often assumed and, at its core, can be interpreted as a form of stochastic gradient ascent on the log-density.
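
A minimal sketch of such a sequence of random walks, assuming the update takes the common Langevin form \( \mathbf{x} \leftarrow \mathbf{x} + \tau_k \nabla \log f_{\mathbf{X}_{\sigma_k}}(\mathbf{x}) + \sqrt{2\tau_k \mathcal{T}_k}\,\mathbf{n} \) (the exact parameterization is the one in the update rule above). For a hypothetical Gaussian target the noisy score is known exactly, so no network is needed; in practice the score would come from a trained denoiser through Tweedie's formula. The geometric schedule, \( \tau_k = 0.2\,\sigma_k^2 \), and \( \mathcal{T}_k = 1 \) are simple illustrative choices, not the settings of Table 1.

```python
import numpy as np


def random_walk_sampler(score, sigmas, tau_fn, temp_fn, steps_per_level, x0, rng):
    """Sequence of random walks, one per noise level sigma_k:
    x <- x + tau_k * score(x, sigma_k) + sqrt(2 * tau_k * T_k) * n,  n ~ N(0, I)."""
    x = np.array(x0, dtype=float)
    for sigma in sigmas:
        tau, temp = tau_fn(sigma), temp_fn(sigma)
        for _ in range(steps_per_level):
            noise = rng.standard_normal(x.shape)
            x = x + tau * score(x, sigma) + np.sqrt(2.0 * tau * temp) * noise
    return x


# Hypothetical toy target: X ~ N(MU, S^2), whose noisy score is known exactly.
MU, S = 1.0, 2.0


def noisy_score(x, sigma):
    return -(x - MU) / (S**2 + sigma**2)


rng = np.random.default_rng(0)
sigmas = np.geomspace(10.0, 0.01, 50)          # decreasing noise levels sigma_k
x0 = sigmas[0] * rng.standard_normal(10_000)   # start near the widest noisy marginal
samples = random_walk_sampler(
    noisy_score,
    sigmas,
    tau_fn=lambda sigma: 0.2 * sigma**2,       # illustrative: step size proportional to sigma_k^2
    temp_fn=lambda sigma: 1.0,                 # illustrative: T_k = 1 at every level
    steps_per_level=100,
    x0=x0,
    rng=rng,
)
print(f"mean={samples.mean():.3f}, std={samples.std():.3f}")  # should be close to MU=1 and S=2
```

Scaling \( \tau_k \) with \( \sigma_k^2 \) keeps the step proportionate to the width of each noisy distribution, so the large early levels mix quickly and the small final levels make only fine adjustments.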

4. Cross-Compatible Use of Different Types of Score within Random Walks

The noise perturbation used in score-based models follows either a variance-exploding (VE) or variance-preserving (VP) formulation. In the VE case (e.g., NCSN), noise is added as:

\[ \mathbf{X}_{\sigma} = \mathbf{X} + \sigma \mathbf{n}, \]

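while in the VP case (e.g., DDPM), the perturbation is:

\[ \mathbf{X}_{t} = \sqrt{\bar{\alpha}_t}\, \mathbf{X} + \sqrt{1 - \bar{\alpha}_t}\, \mathbf{n}, \]

where \( \bar{\alpha}_t \in (0, 1] \) decreases with the diffusion time \( t \), so the signal is scaled down as noise is added.
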
These perturbations lead to different score functions, but they can be related by a unified form:

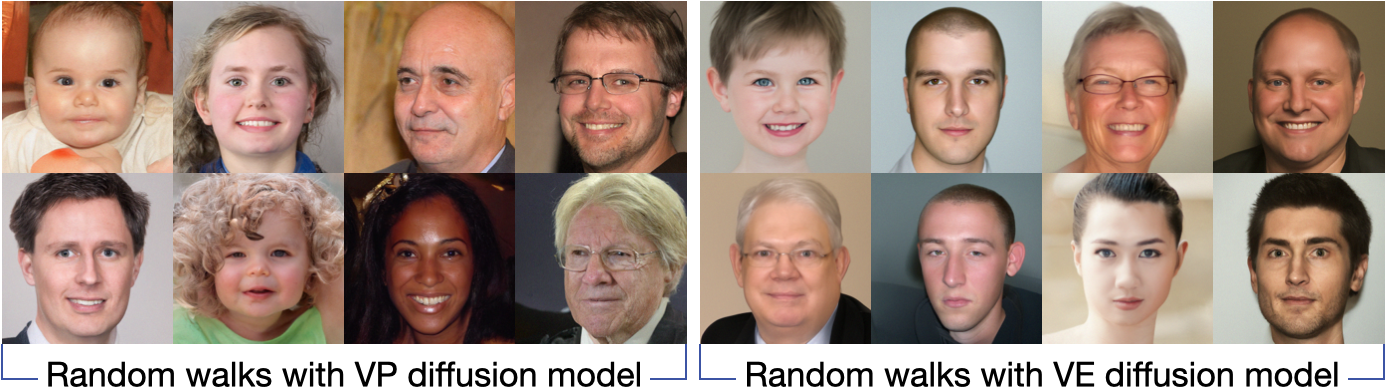

This compatibility enables the use of pretrained VE and VP models within a single random-walk framework, enhancing the generality of our approach. As illustrated in Figure 2, samples generated using VE or VP scores remain visually consistent, even without sampling algorithms tailored to each case, which highlights the flexibility of the unified formulation.
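
One standard way to evaluate a VE score with a VP model is sketched below, under the assumption that the VP score is available as a function of \( \bar{\alpha} \) (real VP networks are indexed by a timestep, whose mapping to \( \bar{\alpha}_t \) depends on the training schedule). Dividing a VP sample by \( \sqrt{\bar{\alpha}_t} \) gives a VE sample with \( \sigma^2 = (1 - \bar{\alpha}_t)/\bar{\alpha}_t \), and a change of variables gives \( \nabla \log f_{\mathrm{VE}}(\mathbf{x}) = \sqrt{\bar{\alpha}}\, \nabla \log f_{\mathrm{VP}}(\sqrt{\bar{\alpha}}\, \mathbf{x}) \). The unified form in the figure above may be written differently; the toy Gaussian prior and function names are hypothetical and serve only to check the identity numerically.

```python
import numpy as np


def ve_score_from_vp_score(vp_score, x, sigma):
    """Evaluate a VE score at noise level sigma using a VP score.

    If Z = sqrt(abar) X + sqrt(1 - abar) n is the VP perturbation, then Z / sqrt(abar)
    is a VE perturbation with sigma^2 = (1 - abar) / abar, and by change of variables
    grad log f_VE(x) = sqrt(abar) * grad log f_VP(sqrt(abar) x).
    """
    abar = 1.0 / (1.0 + sigma**2)
    return np.sqrt(abar) * vp_score(np.sqrt(abar) * x, abar)


# Hypothetical toy prior X ~ N(MU, S^2) with exact VE and VP scores, used only to check the identity.
MU, S = 1.0, 2.0


def vp_score(z, abar):
    # Z ~ N(sqrt(abar) * MU, abar * S^2 + 1 - abar)
    return -(z - np.sqrt(abar) * MU) / (abar * S**2 + 1.0 - abar)


def ve_score(x, sigma):
    # X_sigma ~ N(MU, S^2 + sigma^2)
    return -(x - MU) / (S**2 + sigma**2)


x = np.linspace(-4.0, 6.0, 11)
for sigma in (0.05, 0.5, 5.0, 50.0):
    converted = ve_score_from_vp_score(vp_score, x, sigma)
    print(sigma, np.max(np.abs(converted - ve_score(x, sigma))))
```

With an \( \boldsymbol{\epsilon} \)-prediction network, where \( \nabla \log f_{\mathrm{VP}}(\mathbf{z}) \approx -\boldsymbol{\epsilon}_\theta(\mathbf{z}, t)/\sqrt{1 - \bar{\alpha}_t} \), the same conversion reduces to \( -\boldsymbol{\epsilon}_\theta(\sqrt{\bar{\alpha}_t}\,\mathbf{x}, t)/\sigma \).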

5. Solving Inverse Problems with Learned Score-based Diffusion Priors

In inverse problems, the goal is to recover an unknown signal \( \mathbf{x} \in \mathbb{R}^d \) from degraded measurements \( \mathbf{y} = \mathbf{A} \mathbf{x} + \mathbf{e} \), where \( \mathbf{A} \) is a known forward operator and \( \mathbf{e} \sim \mathcal{N}(\mathbf{0}, \eta^2 \mathbf{I}) \) models Gaussian noise. A principled solution is to sample from the posterior distribution \( f_{\mathbf{X} \mid \mathbf{Y}}(\mathbf{x} \mid \mathbf{y}) \propto f_{\mathbf{Y} \mid \mathbf{X}}(\mathbf{y} \mid \mathbf{x}) f_{\mathbf{X}}(\mathbf{x}) \), combining a learned prior with a known likelihood.

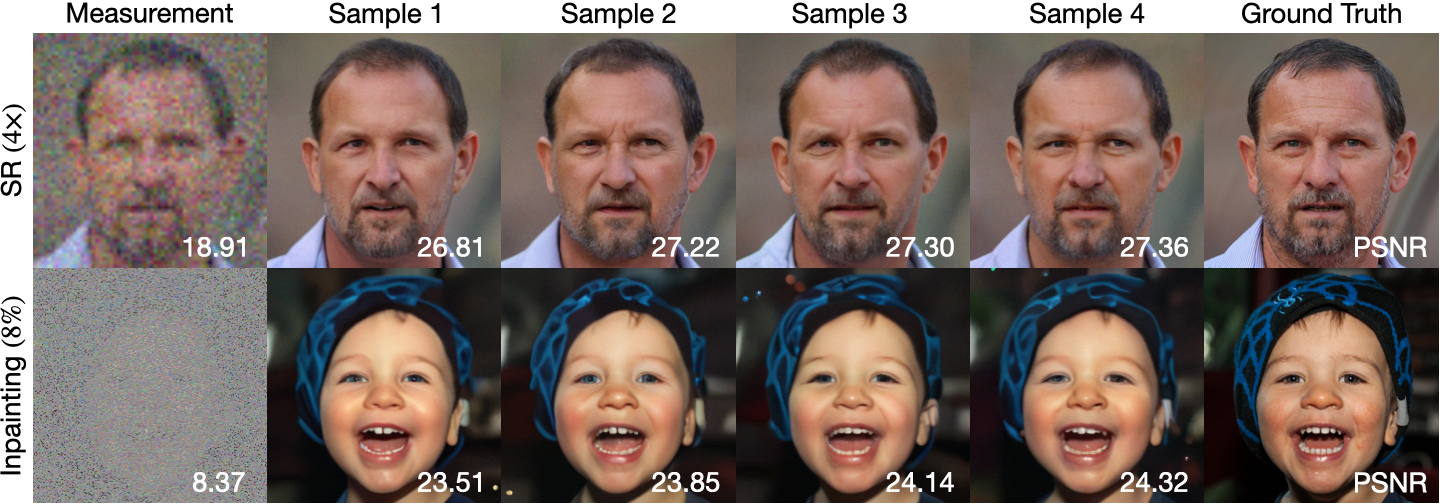

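While many existing methods approximate the intractable gradient \( \nabla \log f_{\mathbf{Y} \mid \mathbf{X}_{\sigma_k}}(\mathbf{y} \mid \mathbf{x}_k) \), our framework bypasses this step by directly incorporating the known likelihood gradient \( \nabla \log f_{\mathbf{Y} \mid \mathbf{X}}(\mathbf{y} \mid \mathbf{x}) \). This yields a simple yet effective update rule: the random walk keeps the same form, with the prior score at each noise level replaced by

\[ \nabla \log f_{\mathbf{X}_{\sigma_k}}(\mathbf{x}_k) + \nabla \log f_{\mathbf{Y} \mid \mathbf{X}}(\mathbf{y} \mid \mathbf{x}_k), \qquad \nabla \log f_{\mathbf{Y} \mid \mathbf{X}}(\mathbf{y} \mid \mathbf{x}) = \frac{1}{\eta^2} \mathbf{A}^{\top} (\mathbf{y} - \mathbf{A}\mathbf{x}). \]
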
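As the noise level \( \sigma_k \to 0 \), the prior score approaches \( \nabla \log f_{\mathbf{X}}(\mathbf{x}) \), so the gradient driving the update approaches \( \nabla \log f_{\mathbf{X}}(\mathbf{x}) + \nabla \log f_{\mathbf{Y} \mid \mathbf{X}}(\mathbf{y} \mid \mathbf{x}) = \nabla \log f_{\mathbf{X} \mid \mathbf{Y}}(\mathbf{x} \mid \mathbf{y}) \) (the evidence \( f_{\mathbf{Y}}(\mathbf{y}) \) does not depend on \( \mathbf{x} \)), enabling direct posterior sampling.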
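
The likelihood-gradient update can be checked on a hypothetical scalar problem where the posterior is Gaussian and known in closed form. The sketch assumes a Langevin-form random walk, a constant step size \( \tau = 0.01 \), \( \mathcal{T}_k = 1 \), and an exact noisy prior score standing in for a trained denoiser; only the exact likelihood gradient \( \mathbf{A}^{\top}(\mathbf{y} - \mathbf{A}\mathbf{x})/\eta^2 \) is added, with no approximation of \( f_{\mathbf{Y} \mid \mathbf{X}_{\sigma_k}} \). A constant step is used because the likelihood term has fixed curvature \( \mathbf{A}^{\top}\mathbf{A}/\eta^2 \) regardless of \( \sigma_k \), so a step that grows with \( \sigma_k \) would become unstable.

```python
import numpy as np

# Hypothetical scalar problem: prior X ~ N(MU, S^2), measurement y = A x + e, e ~ N(0, ETA^2).
MU, S = 1.0, 2.0
A, ETA, Y = 1.0, 0.5, 3.0


def prior_score(x, sigma):
    # Score of the noisy prior X_sigma ~ N(MU, S^2 + sigma^2); a trained denoiser would replace this.
    return -(x - MU) / (S**2 + sigma**2)


def likelihood_grad(x):
    # Exact Gaussian likelihood gradient A^T (y - A x) / eta^2 (A is a scalar here).
    return A * (Y - A * x) / ETA**2


def posterior_random_walk(sigmas, tau, steps_per_level, x0, rng, temp=1.0):
    x = np.array(x0, dtype=float)
    for sigma in sigmas:
        for _ in range(steps_per_level):
            drift = prior_score(x, sigma) + likelihood_grad(x)
            x = x + tau * drift + np.sqrt(2.0 * tau * temp) * rng.standard_normal(x.shape)
    return x


rng = np.random.default_rng(0)
sigmas = np.geomspace(10.0, 0.01, 20)
samples = posterior_random_walk(sigmas, tau=0.01, steps_per_level=100,
                                x0=sigmas[0] * rng.standard_normal(10_000), rng=rng)

# Exact Gaussian posterior for comparison.
post_var = 1.0 / (1.0 / S**2 + A**2 / ETA**2)
post_mean = post_var * (MU / S**2 + A * Y / ETA**2)
print(f"sampled mean={samples.mean():.3f} std={samples.std():.3f}; "
      f"exact mean={post_mean:.3f} std={np.sqrt(post_var):.3f}")
```

With a finite step size the sampled standard deviation is slightly inflated (the usual discretization bias of unadjusted Langevin steps), and the gap shrinks as \( \tau \) decreases.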

Popular Sampling Algorithms as Parameterized Random Walks

Table 1: Proposed templates and parameter settings for common diffusion model algorithms: the noise conditional score network (NCSN), the denoising diffusion probabilistic model (DDPM), and the variance-exploding (VE) and variance-preserving (VP) SDE-based diffusion models. The templates unify the algorithmic configuration for sampling by specifying the noise level \(\sigma_k\), step size \(\tau_k\), and temperature parameter \(\mathcal{T}_k\).
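
As a hedged illustration of how a known sampler fits such a template, the sketch below maps the annealed Langevin step of NCSN [1], \( \mathbf{x} \leftarrow \mathbf{x} + \frac{\alpha_i}{2} \mathbf{s}_\theta(\mathbf{x}, \sigma_i) + \sqrt{\alpha_i}\, \mathbf{z} \) with \( \alpha_i = \epsilon\, \sigma_i^2 / \sigma_L^2 \), onto a template assumed to have the form \( \mathbf{x} + \tau_i \mathbf{s} + \sqrt{2 \tau_i \mathcal{T}_i}\, \mathbf{n} \). Under that assumption, matching the gradient and noise coefficients gives \( \tau_i = \alpha_i/2 \) and \( \mathcal{T}_i = 1 \). Table 1 remains the authoritative parameterization; the noise levels and \( \epsilon \) below are illustrative.

```python
import numpy as np


def ncsn_template_params(sigmas, eps):
    """Map NCSN's annealed Langevin step onto the assumed template.

    NCSN:     x <- x + (alpha_i / 2) * s(x, sigma_i) + sqrt(alpha_i) * z,  alpha_i = eps * sigma_i^2 / sigma_L^2
    Template: x <- x + tau_i * s(x, sigma_i) + sqrt(2 * tau_i * T_i) * z
    """
    sigmas = np.asarray(sigmas, dtype=float)
    alpha = eps * sigmas**2 / sigmas[-1] ** 2  # sigma_L is the smallest (last) noise level
    tau = alpha / 2.0                           # gradient coefficients agree: tau_i = alpha_i / 2
    temp = np.ones_like(tau)                    # noise scales agree: 2 * tau_i * T_i = alpha_i, so T_i = 1
    return tau, temp


sigmas = np.geomspace(1.0, 0.01, 10)  # illustrative geometric noise levels
eps = 2e-5                             # illustrative base step size
tau, temp = ncsn_template_params(sigmas, eps)
alpha = eps * sigmas**2 / sigmas[-1] ** 2
print(np.allclose(np.sqrt(2.0 * tau * temp), np.sqrt(alpha)))
```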

Random Walks Can Use Any Type of Score Functions

Figure 2: Unconditional image generation with VP- and VE-score-based sequences of random walks. This figure illustrates the flexibility of our sequence-of-random-walks framework: it enables unconditional sampling with the VE score and is equally compatible with the VP score under a unified framework. The framework can therefore use either type of score without restricting how the score is trained.

Random Walks Can Use Arbitrary Noise Level Schedule

Figure 1: Unconditional image generation using the proposed score-based random walk framework, which decouples training and sampling to enable flexible noise schedules, step sizes, and temperature settings.
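
Because training and sampling are decoupled, the noise levels \( \sigma_k \) are simply an input to the sampler. The sketch below builds two decreasing schedules with the same endpoints, a geometric one and a sigmoid-shaped one; any decreasing sequence of this kind can serve as the \( \sigma_k \) of the random walk. The sigmoid construction (a rescaled logistic curve in log-\( \sigma \), with a hypothetical `steepness` parameter) is an illustrative assumption and not necessarily the schedule used in the paper's figures.

```python
import numpy as np


def geometric_schedule(sigma_max, sigma_min, num_levels):
    """Geometric (log-linear) noise levels, as in NCSN-style samplers."""
    return np.geomspace(sigma_max, sigma_min, num_levels)


def sigmoid_schedule(sigma_max, sigma_min, num_levels, steepness=10.0):
    """Hypothetical sigmoid-shaped schedule: interpolate log-sigma along a rescaled logistic curve,
    so levels change slowly near both ends and quickly in the middle."""
    t = np.linspace(0.0, 1.0, num_levels)
    g = 1.0 / (1.0 + np.exp(-steepness * (t - 0.5)))
    g = (g - g[0]) / (g[-1] - g[0])                  # rescale so g runs exactly from 0 to 1
    return sigma_max * (sigma_min / sigma_max) ** g   # log-space interpolation, decreasing in k


geo = geometric_schedule(50.0, 0.01, 100)
sig = sigmoid_schedule(50.0, 0.01, 100)
for name, sched in (("geometric", geo), ("sigmoid", sig)):
    print(name, sched[0], sched[-1], bool(np.all(np.diff(sched) < 0)))
```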

Likelihood Approximation-Free Posterior Sampling with Random Walks

Figure 3: Conditional image sampling results using the score-based sequence of random walks with a sigmoid noise schedule. We conditionally sample four images under the same setup. This figure demonstrates that our simplified framework extends beyond unconditional image synthesis to effectively solve inverse problems.

References

- [1] Y. Song and S. Ermon, “Generative modeling by estimating gradients of the data distribution,” in Advances in Neural Information Processing Systems, vol. 32, 2019.

- [2] J. Ho, A. Jain, and P. Abbeel, “Denoising diffusion probabilistic models,” in Advances in Neural Information Processing Systems, vol. 33, 2020, pp. 6840–6851.

- [3] Y. Song, J. Sohl-Dickstein, D. P. Kingma, A. Kumar, S. Ermon, and B. Poole, “Score-based generative modeling through stochastic differential equations,” in 9th International Conference on Learning Representations (ICLR), 2021.